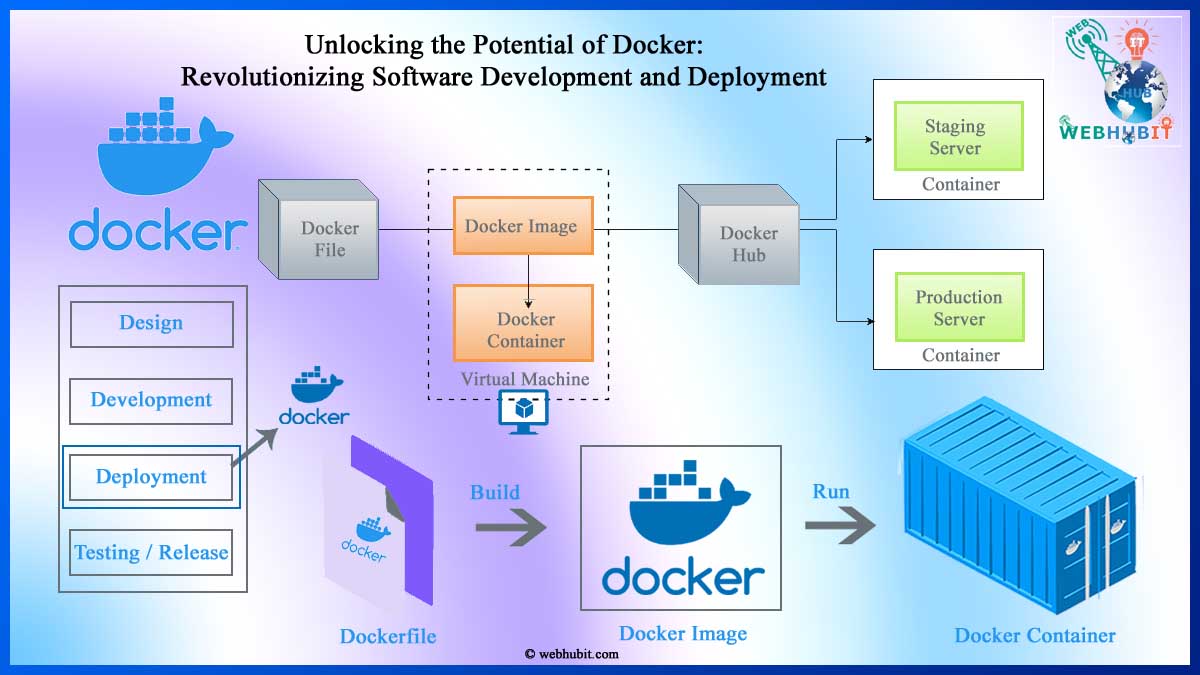

In the ever-evolving landscape of software development, the quest for efficient, scalable, and reliable deployment solutions has been a constant challenge. Enter Docker, a transformative platform that is reshaping the way developers build, ship, and run applications. In this comprehensive exploration, we’ll delve into the intricacies of Docker, uncovering its core concepts, advantages, and real-world applications.

Understanding Docker: A Journey into Containerization

At its essence, Docker is a containerization platform that empowers developers to encapsulate applications and their dependencies into lightweight, portable containers. These containers run in isolation from other processes on the host, providing a consistent environment for running applications across diverse infrastructure.

The magic of Docker lies in its ability to package everything an application needs – code, runtime, libraries, and dependencies – into a single container image. Unlike traditional virtual machines, which require separate operating systems for each instance, Docker containers share the host system’s kernel, resulting in faster startup times and reduced resource overhead.

The Docker Ecosystem: A Tapestry of Innovation

Central to the Docker ecosystem is Docker Engine, the runtime environment responsible for executing containers. Docker Engine abstracts away the complexities of containerization, providing developers with a streamlined interface for building, running, and managing containers.

Complementing Docker Engine is Docker Hub, a cloud-based registry that serves as a repository for Docker images. Here, developers can discover, share, and collaborate on containerized applications, leveraging a vast library of pre-built images to expedite their development workflows.

Furthermore, Docker Compose simplifies the orchestration of multi-container applications, allowing developers to define complex deployments with a declarative YAML syntax. With Docker Compose, managing interconnected services and configuring networking and storage resources becomes a breeze, enabling faster iteration and innovation.

Advantages of Docker: Empowering Developers, Transforming Organizations

The adoption of Docker confers a multitude of benefits for organizations seeking to streamline their software development and deployment processes. Foremost among these advantages is portability. Docker containers encapsulate everything needed to run an application, ensuring consistency across different environments. This portability facilitates seamless deployment across development, testing, and production environments, reducing the risk of compatibility issues and accelerating time-to-market.

Moreover, Docker promotes scalability by enabling the rapid deployment and scaling of containerized applications. With Docker Swarm or Kubernetes, organizations can orchestrate container clusters, dynamically allocating resources based on demand. This elasticity allows for more efficient resource utilization and greater flexibility in responding to fluctuating workloads.

In the realm of continuous integration and continuous deployment (CI/CD), Docker plays a pivotal role in automating the software delivery pipeline. By integrating Docker into CI/CD workflows, organizations can automate the building, testing, and deployment of applications, accelerating time-to-market and enhancing overall agility. Furthermore, Docker’s immutable infrastructure model ensures that deployments are predictable and rollback-ready, minimizing downtime and mitigating risks.

Real-World Applications: From Development to Production

The versatility of Docker extends across various stages of the software development lifecycle. During development, developers can use Docker to create isolated development environments that mirror production, eliminating the notorious “it works on my machine” syndrome. By defining development environments as code, teams can ensure consistency and reproducibility, streamlining collaboration and debugging processes.

In production, Docker empowers organizations to deploy and manage complex microservices architectures with ease. By containerizing individual components of an application, organizations can achieve greater agility, scalability, and resilience. Moreover, the wider container ecosystem, including Docker Swarm and Kubernetes for orchestration and Prometheus for monitoring, provides robust solutions for orchestrating, monitoring, and scaling containerized workloads in production environments.

Challenges and Considerations: Navigating the Docker Landscape

While Docker offers compelling advantages, it is not without its challenges. Managing containerized environments at scale requires careful consideration of security, resource allocation, and networking configurations. Organizations must implement robust container security practices, including image scanning, access control, and vulnerability management, to mitigate potential threats.

Additionally, optimizing resource utilization and orchestrating container clusters efficiently demand expertise in container orchestration platforms like Docker Swarm or Kubernetes. Organizations must invest in training and upskilling their teams to harness the full potential of Docker and containerization technologies.

The Future of Docker: Innovations on the Horizon

As Docker continues to evolve, we can expect to see advancements in areas such as container networking, storage, and observability. Tools like Docker Desktop aim to further streamline development and deployment workflows, while Docker Enterprise, which Mirantis acquired in 2019, caters to the needs of larger enterprises.

Moreover, Docker’s integration with emerging technologies like serverless computing and edge computing holds the promise of unlocking new frontiers in application deployment and delivery. By embracing a container-centric approach, organizations can future-proof their infrastructure and stay ahead in an ever-changing technological landscape.

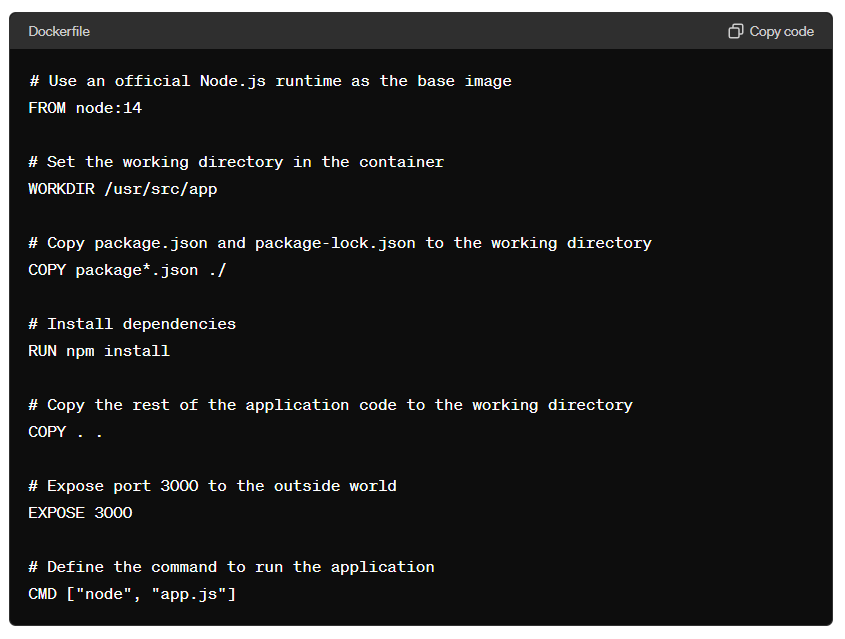

A simple Dockerfile for a Node.js application follows these steps:

- We start by selecting a Node.js runtime image from Docker Hub, an established repository of Docker images. This image provides the foundational environment for our application.

- Following that, we designate a working directory within the container, setting it as `/usr/src/app`. This directory will house all the files and directories associated with our application.

- After setting up the workspace, we copy the `package.json` and `package-lock.json` files into this directory. These files are critical as they list all the dependencies our application relies on. Subsequently, we execute `npm install` to download and install these dependencies within the container.

- Once the dependencies are successfully installed, we copy the remaining files and directories comprising our application into the workspace directory. This includes all the necessary files to execute our Node.js application.

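- Furthermore, we declare that our application listens on port 3000. This declaration documents the entry point for external services or users; the port still has to be published when the container starts (for example with the `-p` flag of `docker run`) before it is reachable from outside the container.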

- Finally, we define the command to be executed by Docker upon container startup. In this instance, we instruct Docker to run `node app.js`, thereby initiating our Node.js application.
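The steps above can be sketched as the following Dockerfile. It is a minimal reconstruction from the description, not a definitive listing: the `node:20-alpine` tag is an assumption (the description does not name a Node.js version), and the file assumes that `package.json`, `package-lock.json`, and `app.js` sit in the build context.

```dockerfile
# Base image: an official Node.js runtime from Docker Hub.
# The "20-alpine" tag is an assumption; any supported Node.js tag would do.
FROM node:20-alpine

# Working directory inside the container for all application files.
WORKDIR /usr/src/app

# Copy only the dependency manifests first, so the install layer below
# is reused from cache until one of these two files changes.
COPY package.json package-lock.json ./

# Install the dependencies listed in package.json.
RUN npm install

# Copy the rest of the application source into the working directory.
COPY . .

# Document that the application listens on port 3000.
# This does not publish the port; that happens at "docker run" time.
EXPOSE 3000

# Command executed when a container starts from this image.
CMD ["node", "app.js"]
```

Assuming the file is saved as `Dockerfile` in the project root, `docker build -t my-node-app .` builds the image and `docker run -p 3000:3000 my-node-app` starts a container with port 3000 published on the host. The tag `my-node-app` is a hypothetical name chosen for illustration.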

This Dockerfile serves as a recipe for building a Docker image tailored to a Node.js application. Once the Dockerfile is finalized, the `docker build` command assembles the image, and the `docker run` command starts a container from that image.

Conclusion: Embracing the Docker Revolution

In conclusion, Docker represents a paradigm shift in software deployment, offering unparalleled agility, scalability, and efficiency. By adopting Docker, organizations can accelerate innovation, streamline operations, and deliver value to customers at scale. As Docker continues to gain traction, staying abreast of best practices and emerging trends will be key to harnessing its full potential. So, are you ready to embark on the Docker journey and unlock the future of software deployment?